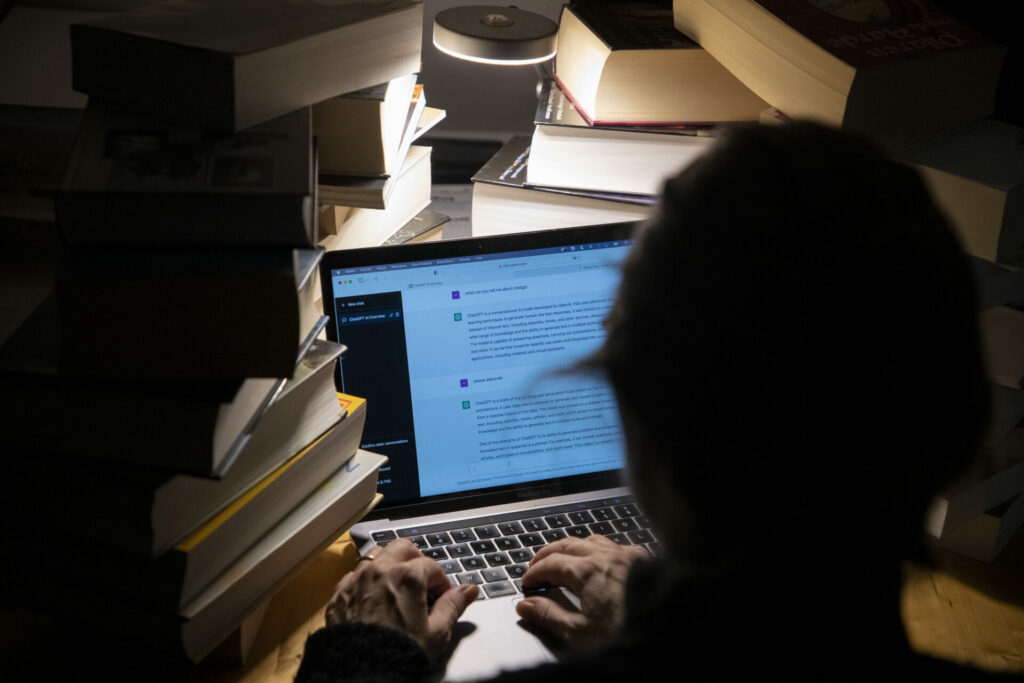

A young Belgian man recently died by suicide after talking to a chatbot named ELIZA for several weeks, spurring calls for better protection of citizens and for greater public awareness of the risks.

"Without these conversations with the chatbot, my husband would still be here," the man's widow has said, according to La Libre. She and her late husband were both in their thirties, lived a comfortable life and had two young children.

However, about two years ago, the first signs of trouble started to appear. The man became very eco-anxious and found refuge with ELIZA, the name given to a chatbot that uses GPT-J, an open-source artificial intelligence language model developed by EleutherAI. After six weeks of intensive exchanges, he took his own life.

Last week, the family spoke with Mathieu Michel, Secretary of State for Digitalisation, in charge of Administrative Simplification, Privacy and the Regulation of Buildings. "I am particularly struck by this family's tragedy. What has happened is a serious precedent that needs to be taken very seriously," he said on Tuesday.

He stressed that this case highlights that it is "essential to clearly define responsibilities."

"With the popularisation of ChatGPT, the general public has discovered the potential of artificial intelligence in our lives like never before. While the possibilities are endless, the danger of using it is also a reality that has to be considered."

Urgent steps to avoid tragedies

To avoid such a tragedy in the immediate future, he argued that it is essential to identify the nature of the responsibilities leading to this kind of event.

"Of course, we have yet to learn to live with algorithms, but under no circumstances should the use of any technology lead content publishers to shirk their own responsibilities," he noted.

OpenAI itself has admitted that ChatGPT can produce harmful and biased answers, adding that it hopes to mitigate the problem by gathering user feedback.

In the long term, Michel noted that it is essential to raise awareness of the impact of algorithms on people's lives "by enabling everyone to understand the nature of the content people come up against online."

Here, he referred to new technologies such as chatbots, but also deep fakes – a type of artificial intelligence which can create convincing images, audio and video hoaxes – which can test and warp people's perception of reality.

Michel added that citizens must also be adequately protected from certain applications of artificial intelligence that "pose a significant risk."

The European Union is looking to regulate the use of artificial intelligence with an AI Act, which it has been working on for the past two years. Michel has set up a working group to analyse the text currently being prepared by the EU and to propose any necessary adjustments.

This article was updated on Wednesday 29 March to correct the previous statement that the chatbot's technology was developed by OpenAI.